BashItOut

RTL SDR Frequency Drift Offset

I’ve been using the famous RTL DVB-T dongle recently as an SDR system and noticed how unstable the frequency seemed to be.

Using GQRX, the frequencies reported by the RTL-SDR are slightly off and the error changes over time, making it difficult to get an accurate idea of a source signal’s actual frequency.

Causes of the Errors

There are a couple of causes for this error. One is manufacturing tolerance: DVB-T dongles are built down to a price, and as long as the tolerances are within what’s needed for digital TV, all is normally fine. When using a dongle as an SDR, though, it needs calibrating.

Another cause for error is thermal drift. The dongle heats up as it’s being used and (again due to the cheaper parts) the crystal oscillator changes frequency slightly with the increase in heat. This means that even if you calibrate your dongle before you start using it, a short while later it can be out of calibration again.

Calibration

There are numerous ways to calibrate RTL-SDR dongles, but the method I prefer is Kalibrate-RTL. It looks for GSM signals (which are required to be highly accurate) and uses them to establish the PPM offset error (PPM = Parts Per Million).
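As a rough sketch of the workflow, Kalibrate-RTL is typically run twice: once with `kal -s <band>` to scan a GSM band for base stations, then with `kal -c <channel>` to measure the offset against one of them. The helper below picks the strongest channel from a scan. The function name `strongest_channel` is made up for this example, and the scan line format shown in the comment is an assumption based on typical `kal` output, so check it against what your build prints.

```bash
#!/bin/bash

# Hypothetical helper: read `kal -s` scan output on stdin and print the
# channel number with the highest reported power.
# Assumed line format (verify against your kal build):
#   chan:   45 (944.0MHz + 35.592kHz)    power: 71326.84
strongest_channel() {
    awk '
        $1 == "chan:" {
            chan = $2
            power = 0
            # Find the value that follows the "power:" label on this line.
            for (i = 3; i < NF; i++) {
                if ($i == "power:") { power = $(i + 1) + 0 }
            }
            if (best == "" || power > best_power) {
                best = chan
                best_power = power
            }
        }
        END { if (best != "") print best }
    '
}

# Example usage (needs a dongle attached):
#   kal -s GSM900 2>&1 | strongest_channel
```

The channel number it prints can then be passed to `kal -c` for the actual offset measurement.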

This PPM value can then be used in software like GQRX or SDRSharp as a Frequency Correction value.

To put this offset error into perspective, if I were to tune to Channel 1 of the PMR446 frequencies (446.00625 MHz) and my RTL-SDR dongle had a PPM offset error of 40.5, I’d actually be receiving roughly 446.02431 MHz. That is about 18 kHz off: past Channel 2 (446.01875 MHz) and heading towards Channel 3 (446.03125 MHz).
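The arithmetic behind that example is just the tuned frequency multiplied by the PPM error and divided by one million. A minimal sketch, using `awk` for the floating-point maths (the function name `ppm_offset_hz` is made up for this example):

```bash
#!/bin/bash

# Hypothetical helper: offset in Hz for a frequency in MHz and an error in PPM.
# offset_hz = (freq_mhz * 1e6) * (ppm / 1e6) = freq_mhz * ppm
ppm_offset_hz() {
    awk -v f="$1" -v ppm="$2" 'BEGIN { printf "%.0f\n", f * ppm }'
}

# PMR446 Channel 1 with a 40.5 PPM error:
ppm_offset_hz 446.00625 40.5    # prints 18063
```

That is roughly 18 kHz, or nearly one and a half of the 12.5 kHz steps between PMR446 channels.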

Fortunately there are a few good points about the offset error:

1) It’s linear across all frequencies, so a single PPM value corrects the whole tuning range.

2) The thermal drift stabilizes after the dongle has warmed up.

Offset Logging

I thought it would be useful to monitor the drift on my RTL-SDR dongle and see, not only how much it’s out by, but how much it changes over time as the thermal drift affects the oscillator crystal.

I wrote the following Bash script to continually monitor a specific GSM channel and log the PPM offset error, along with providing the time in seconds since the test was started.

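```bash
#!/bin/bash
# Log the PPM offset reported by kal for one GSM channel, once per kal run.

if [ $# -ne 1 ]
then
    echo "Error!"
    echo "Usage: $0 <channel>"
    exit 1
fi

START_TIME=$(date +%s)

while true
do
    # Pull the numeric value from kal's "error" line (the sign is not captured).
    PPM=$(kal -c "$1" 2>&1 | grep error | grep -Po "\d*\.\d*")
    CURRENT_TIME=$(date +%s)
    TIME_DELTA=$((CURRENT_TIME - START_TIME))
    # Output format: seconds since start, PPM offset
    echo "${TIME_DELTA}, ${PPM}"
done
```

I ran each of these tests for one hour, on separate mornings, so the dongle could cool down fully overnight.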

Test Results

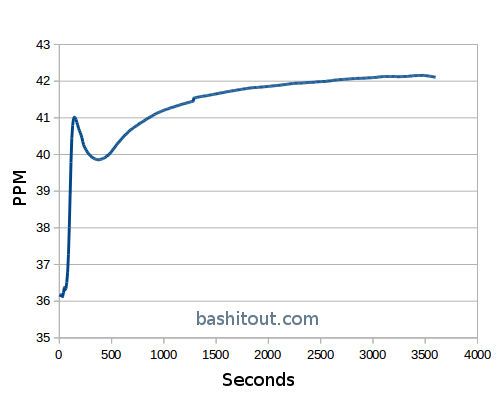

The first test shows the offset going from around 36 PPM to around 42 PPM, where it stabilized after approximately 30 minutes.

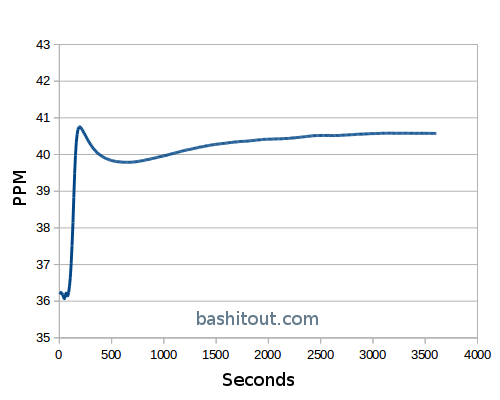

The second test again started at around 36 PPM, but this time stabilized at around 40.5 PPM after approximately 30 minutes. I imagine the difference here was due to different ambient room temperatures on each day.

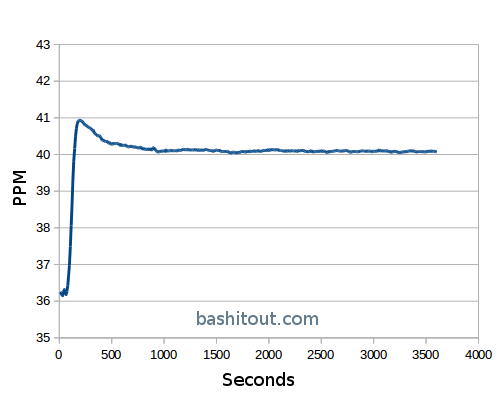

For the third test I decided to run the same calibration, but removed the plastic case from the dongle to see if it became thermally stable any quicker.

Here the offset went from 36 PPM to 40 PPM, stabilizing after approximately 15 to 20 minutes.

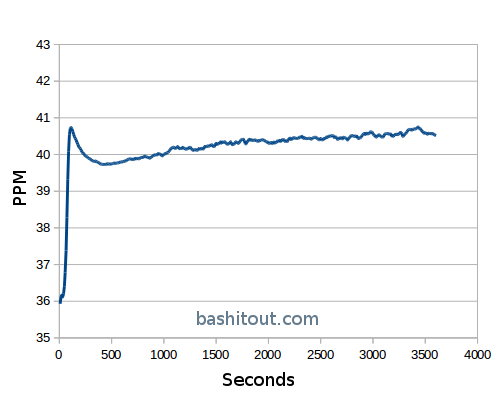

The following day I repeated the test with the dongle’s case removed and saw a shift from 36 PPM to around 40.5 PPM, stabilizing after approximately 30 minutes.
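The stabilization times above were read off the graphs, but a rough estimate can also be pulled from the logged `seconds, ppm` lines. The sketch below is one possible approach, not the method used for the results in this post: it takes the last reading as the settled value and reports the earliest time after which every reading stays within a tolerance of it. The function name `settle_time`, the 0.5 PPM default tolerance, and the log file name are all assumptions.

```bash
#!/bin/bash

# Hypothetical helper: estimate when the offset settles.
# Input (stdin): lines of "seconds, ppm" as printed by the logging script.
# Argument 1:    tolerance in PPM (assumed default: 0.5).
# Output:        "<settle_seconds> <final_ppm>"
settle_time() {
    awk -F',' -v tol="${1:-0.5}" '
        # Keep only lines whose second field is a number (kal can fail).
        NF == 2 && $2 ~ /^[ \t]*-?[0-9.]+[ \t]*$/ {
            n++
            t[n] = $1 + 0
            ppm[n] = $2 + 0
        }
        END {
            if (n == 0) exit 1
            final = ppm[n]
            settle = t[n]
            # Walk backwards until a reading falls outside the tolerance band.
            for (i = n; i >= 1; i--) {
                diff = ppm[i] - final
                if (diff < 0) diff = -diff
                if (diff > tol) break
                settle = t[i]
            }
            print settle, final
        }
    '
}

# Example usage, assuming the log was saved to drift.log:
#   settle_time 0.5 < drift.log
```

Using the last reading as the reference only makes sense if the run was long enough for the dongle to settle, which the one-hour tests here appear to be.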

Conclusion

In conclusion, the following points are my main take-aways from this test:

- Calibration is important.

- The thermal stabilization time for this dongle is around 30 minutes.

- It’s good practice to calibrate before each use, to account for the ambient room temperature.

- Removing the RTL-SDR’s case may provide quicker stabilization times.
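The "calibrate before each use" point can be scripted. The sketch below is an example under stated assumptions rather than part of the tests in this post: it reuses the same `kal`/`grep` pipeline as the logging script to take one reading, then rounds it to a whole number, because some command-line tools such as `rtl_fm` take the correction as an integer via `-p`, as far as I know. The function names `measure_ppm` and `round_ppm` are made up here, and the channel number in the usage comment is a placeholder.

```bash
#!/bin/bash

# Hypothetical helper: take a single PPM reading on the given GSM channel,
# using the same kal/grep pipeline as the logging script.
measure_ppm() {
    kal -c "$1" 2>&1 | grep error | grep -Po "\d*\.\d*"
}

# Hypothetical helper: round a PPM value to the nearest whole number.
round_ppm() {
    awk -v x="$1" 'BEGIN { print ((x < 0) ? int(x - 0.5) : int(x + 0.5)) }'
}

# Example usage (needs a warmed-up dongle; channel 45 is a placeholder):
#   PPM=$(round_ppm "$(measure_ppm 45)")
#   rtl_fm -f 446.00625M -M fm -p "$PPM" - | aplay -r 24000 -f S16_LE
```

Given the drift seen in the tests, it makes sense to take the reading after the dongle has been running for a while rather than straight after plugging it in.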

Further Work

I have more RTL-SDR / DVB-T dongles on order to see how much they differ, and may do some more calibration logging, along with notes on the room temperature, to see how much that affects the stabilized offset.

Heatsinks and forced air cooling may also be an interesting experiment to try. I’d imagine that would help the dongle stabilize quicker. Oil cooling the RTL-SDR could also be an option!

I’d be interested to hear your experiences on frequency drift on these devices and if you have any other ideas to improve the calibration.

Posted on 05 April 2016.
